Multihop FCoE 102: VN_port proxy and FIP snooping

A few weeks ago I wrote about the multihop FCoE basics and the two fundamentally different ways an FCoE network could be designed: FCoE on every switch or FCoE on the edges with DCB-extended bridging in the middle.

There are two other configurations you’ll likely see in the access parts of an FCoE network: FCoE VN_port proxying and FIP snooping.

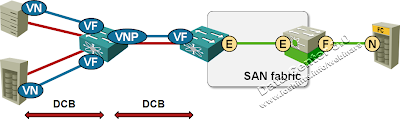

FCoE VN_port proxy

This design is very similar to traditional FC N_port proxying (using NPIV functionality). The FCoE switch presents a VF_port to the FCoE clients (servers or storage) and pretends to be a client (VN_port with NPIV) to the rest of the fabric.

The N_port proxying has a few clear benefits that the vendors will be quick to point out:

- The proxying switch does not participate in FSPF routing, reducing the size of the core SAN fabric;

- The proxying switch does not have an FC domain ID (remember: switches are called domains in FC lingo), allowing you to build larger networks when the other FC switches in your network support ridiculously small networks (supposedly you could be limited to 16-switch SAN networks).

Vendors like N_port proxying for more mundane reasons. Instead of having to code the full FC switch functionality they can get away with the minimum subset supporting only the N_ports, F_ports and NPIV (N_port ID virtualization). The implementation versatility might vary, but I would be surprised if you could use a proxying switch on its own (without being connected to a full-blown FC switch).

Last but not least, the proxying approach has a few drawbacks that you’ll rarely hear about. It’s static routing in disguise; sessions going through an NP_port cannot be rerouted in case of port or switch failure. The NP_port is thus a single point of failure.

Remember: You should use NP/VNP_ports on your FCoE switch in combination with multipath (MPIO) FC sessions from your servers (Enodes), ensuring that the sessions go through different NP/VNP_ports of the FCoE switch.
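To make the "static routing in disguise" point concrete, here is a toy Python model of session pinning. It is only a sketch: the `ProxySwitch` class, the uplink names and the host names are all hypothetical, and it does not describe any vendor's implementation. It simply assumes that every login is pinned to one uplink and that sessions pinned to a failed uplink are lost rather than rerouted.

```python
from dataclasses import dataclass, field

# (host, HBA/CNA port); an illustrative naming scheme, not a real identifier
Session = tuple[str, str]


@dataclass
class ProxySwitch:
    """Toy proxy switch: each login is statically pinned to one NP/VNP uplink."""

    uplinks: set[str]
    pinned: dict[Session, str] = field(default_factory=dict)

    def login(self, session: Session, uplink: str) -> None:
        """Pin a session to an uplink that is currently available."""
        if uplink not in self.uplinks:
            raise ValueError(f"unknown or failed uplink: {uplink}")
        self.pinned[session] = uplink

    def fail_uplink(self, uplink: str) -> list[Session]:
        """Fail an uplink and return the sessions that went down with it."""
        self.uplinks.discard(uplink)
        lost = sorted(s for s, u in self.pinned.items() if u == uplink)
        for session in lost:
            del self.pinned[session]  # no rerouting: the session is simply gone
        return lost

    def hosts_with_storage(self) -> set[str]:
        """Hosts that still have at least one working session."""
        return {host for host, _ in self.pinned}
```

In this model a server with two sessions pinned to different uplinks keeps its storage access when one uplink fails, while a single-path server loses it, which is why the multipath recommendation matters.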

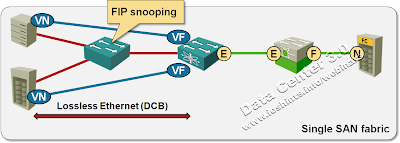

FIP snooping

Have you ever designed a network where multiple servers were exchanging traffic over a shared Ethernet LAN? I know you have. Have you worried about the security implications of a shared medium? I’m positive you have and decided segmenting servers into VLANs based on their security zones was good enough. Obviously storage is special ... VLAN segmentation is no longer sufficient; if the FCoE clients are not attached directly to an FCoE switch you should implement dynamic MAC access lists on the intervening switches.

The intervening switches should snoop on FIP (FCoE Initialization Protocol) traffic, figuring out which Enode (server/storage) has logged in to the FC fabric through which FCoE switch and dynamically building MAC access lists limiting the propagation of FCoE traffic (you’ll find an in-depth description of FIP snooping in the Define the Cloud blog).
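The snooping logic can be sketched in a few lines of Python. This is a simplified illustration, not a real switch implementation: the `FipSnoopingBridge` class and its method names are hypothetical, FIP frame parsing is skipped entirely, and the sketch assumes fabric-provided MAC addresses (FPMA), where the VN_port MAC address is the 24-bit FC-MAP prefix (default `0E:FC:00`) followed by the 24-bit FC_ID assigned at fabric login.

```python
DEFAULT_FC_MAP = 0x0EFC00  # default FC-MAP prefix used for FPMA addresses


def fpma(fc_id: int, fc_map: int = DEFAULT_FC_MAP) -> str:
    """Build a fabric-provided MAC address: FC-MAP (24 bits) + FC_ID (24 bits)."""
    if not 0 <= fc_id < 1 << 24 or not 0 <= fc_map < 1 << 24:
        raise ValueError("FC_ID and FC-MAP must both fit in 24 bits")
    value = (fc_map << 24) | fc_id
    return ":".join(f"{b:02x}" for b in value.to_bytes(6, "big"))


class FipSnoopingBridge:
    """Toy bridge that permits FCoE frames only for snooped fabric logins."""

    def __init__(self) -> None:
        # Each entry is (ingress port, VN_port MAC, FCoE switch MAC)
        self.acl: set[tuple[str, str, str]] = set()

    def on_login_accept(self, enode_port: str, fcf_mac: str, fc_id: int) -> str:
        """Called when a successful fabric login is seen in FIP traffic."""
        vn_mac = fpma(fc_id)
        self.acl.add((enode_port, vn_mac, fcf_mac))
        return vn_mac

    def on_logout(self, enode_port: str, fcf_mac: str, fc_id: int) -> None:
        """Called when the Enode logs out; removes the matching entry."""
        self.acl.discard((enode_port, fpma(fc_id), fcf_mac))

    def permits_fcoe(self, port: str, src_mac: str, dst_mac: str) -> bool:
        """FCoE frames pass only from the logged-in MAC to its FCoE switch."""
        return (port, src_mac, dst_mac) in self.acl
```

After a login with FC_ID `0x010203` is snooped on a port, the bridge accepts FCoE frames from `0e:fc:00:01:02:03` on that port toward the FCoE switch that accepted the login, and drops FCoE frames carrying the same source MAC address on any other port.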

Do you think FIP snooping is overkill? I do (more so if you use FCoE from ESX/vSphere servers, not from virtual machines or bare-metal servers), and so did the FCoE committee that made FIP snooping an optional part of the FCoE standard (informative Annex C to FC-BB-5). If, however, your switching hardware already supports snooping (and some other vendors are still trying to catch up), it’s a nice differentiating factor for your marketing department.

More information

You’ll get a high-level overview of all major storage protocols (including FC, FCoE, iSCSI and NFS) in my Data Center 3.0 for Networking Engineers webinar (buy a recording or yearly subscription).

Joe Onisick did a deep dive into FIP and FIP snooping.

Scott Lowe wrote a nice series of posts describing Network Interface Virtualization and the interaction between FCoE and NIV.