Why Do We Need Session Stickiness in Load Balancing?

One of the engineers watching my Data Center 3.0 webinar asked me why we need session stickiness in load balancing, what its impact is on load balancer performance, and whether we could get rid of it. Here’s the whole story from the networking perspective.

Update 2017-03-22: Added a link to the PROXY protocol, which can be used to pass the original client IP address to a web server even when the SSL connection is terminated on the web server.

What Is (Network) Load Balancing?

Load balancing is one of the dirty tricks we have to use because TCP doesn’t have a session layer (yes, I had to start there). It allows a farm of servers to appear to the outside world as a single IP address.

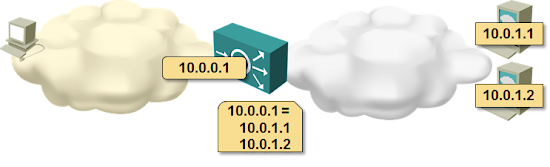

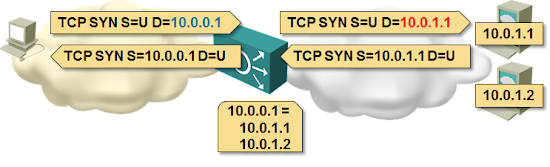

There are numerous ways to make this trick work; the most common one involves network address translation (even in the IPv6 world).

Whenever a client tries to open a new session with the shared (aka outside or virtual) IP address, the load balancer decides which server should serve the client, opens a TCP session to the selected server, and creates a NAT translation entry that translates the TCP session to the virtual IP address into a TCP session to the server’s real IP address.
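A minimal sketch of such a translation table might look like the following Python code. It is a toy model under several assumptions: a TCP session is reduced to its 4-tuple, new sessions are assigned round-robin, and the names (`Flow`, `NatLoadBalancer`) are hypothetical. Real load balancers also track TCP state and expire idle entries.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A TCP session reduced to its 4-tuple (simplified, hypothetical model)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class NatLoadBalancer:
    """Toy destination-NAT load balancer: virtual IP -> selected real server."""

    def __init__(self, vip: str, servers: list[str]) -> None:
        self.vip = vip
        self._next_server = itertools.cycle(servers)  # round-robin server choice
        self.table: dict[Flow, Flow] = {}    # client->VIP flow -> client->server flow
        self.reverse: dict[Flow, Flow] = {}  # server->client flow -> VIP->client flow

    def inbound(self, flow: Flow) -> Flow:
        """Translate a client->VIP packet into a client->server packet."""
        if flow not in self.table:
            server = next(self._next_server)  # new session: pick a server
            inside = Flow(flow.src_ip, flow.src_port, server, flow.dst_port)
            self.table[flow] = inside
            # Only the load balancer knows how to map the server's replies
            # back to the virtual IP address the client connected to.
            reply = Flow(server, flow.dst_port, flow.src_ip, flow.src_port)
            self.reverse[reply] = Flow(flow.dst_ip, flow.dst_port, flow.src_ip, flow.src_port)
        return self.table[flow]

    def outbound(self, flow: Flow) -> Flow:
        """Translate a server->client reply so it appears to come from the VIP."""
        return self.reverse[flow]
```

The `reverse` table exists only on the load balancer, which is why the server's replies have to come back through it.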

In this scenario the return traffic MUST pass through the load balancer – the load balancer is the only point in the network that knows how to translate the “inside” TCP session into the “outside” TCP session.

You can use routing to push the return traffic through the load balancer (the load balancer is the default gateway for the server farm), or source NAT, where the source IP address of the original client is replaced with the load balancer’s IP address, ensuring the return traffic always goes through the load balancer.
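The source NAT variant can be sketched the same way. In this hypothetical model the load balancer replaces the client's address and port with its own address and a locally allocated port, so the server addresses its replies to the load balancer regardless of its default gateway. The class name and the naive sequential port allocator are assumptions for illustration only.

```python
import itertools


class SourceNat:
    """Toy source NAT on a load balancer (hypothetical, simplified).

    Server replies are addressed to lb_ip, so they return through the
    load balancer no matter what the server's default gateway is.
    """

    def __init__(self, lb_ip: str, first_port: int = 20000) -> None:
        self.lb_ip = lb_ip
        self._ports = itertools.count(first_port)       # naive port allocator
        self.by_client: dict[tuple[str, int], int] = {}  # (client IP, port) -> LB port
        self.by_port: dict[int, tuple[str, int]] = {}    # LB port -> (client IP, port)

    def translate_source(self, client_ip: str, client_port: int) -> tuple[str, int]:
        """Return the (source IP, source port) the server will see."""
        key = (client_ip, client_port)
        if key not in self.by_client:
            port = next(self._ports)
            self.by_client[key] = port
            self.by_port[port] = key
        return self.lb_ip, self.by_client[key]

    def restore_destination(self, lb_port: int) -> tuple[str, int]:
        """Map a server reply (sent to lb_ip:lb_port) back to the original client."""
        return self.by_port[lb_port]
```

Whichever client connects, the server sees only `lb_ip` as the source address; the real client address survives only in the load balancer's tables.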

Source NAT hides the client’s real IP address from the servers, making it impossible to use geolocation or IP address-based actions (like user banning). A load balancer could insert the client’s real IP address into the X-Forwarded-For HTTP header, which obviously doesn’t work if you want to use TLS (aka SSL) all the way to the web servers. You can, however, use the PROXY protocol in that case, assuming your web server supports it (thanks to Lukas Tribus for pointing that out).
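Version 1 of the PROXY protocol (the human-readable variant in the HAProxy specification) prepends a single text line of the form `PROXY TCP4 <client IP> <proxy IP> <client port> <proxy port>` followed by CRLF to the TCP stream, before any application data. Because the line sits outside the payload, it works even when the payload is TLS the load balancer never decrypts. The Python sketch below builds and strips such a line. It handles only the TCP4/TCP6 forms (the specification also defines `UNKNOWN` and a binary version 2), and the function names are hypothetical.

```python
import ipaddress


def build_proxy_v1(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Build a PROXY protocol v1 line: client address/port, then proxy address/port."""
    proto = "TCP4" if ipaddress.ip_address(src_ip).version == 4 else "TCP6"
    return f"PROXY {proto} {src_ip} {dst_ip} {src_port} {dst_port}\r\n".encode("ascii")


def parse_proxy_v1(data: bytes) -> tuple[dict, bytes]:
    """Strip a PROXY v1 line from the start of a TCP stream.

    Returns the parsed addresses and the remaining bytes, i.e. the untouched
    (possibly TLS-encrypted) application payload.
    """
    end = data.find(b"\r\n")
    # The specification limits the line to 107 bytes including the CRLF.
    if not data.startswith(b"PROXY ") or end == -1 or end > 105:
        raise ValueError("no valid PROXY v1 header")
    parts = data[:end].decode("ascii").split(" ")
    if len(parts) != 6 or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("unsupported PROXY v1 header (UNKNOWN is not handled here)")
    _, proto, src_ip, dst_ip, src_port, dst_port = parts
    info = {"proto": proto, "src_ip": src_ip, "dst_ip": dst_ip,
            "src_port": int(src_port), "dst_port": int(dst_port)}
    return info, data[end + 2:]
```

The web server reads the client address from the parsed line and then hands the remaining bytes to its normal TLS/HTTP processing.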

Want to know more about load balancing mechanisms, from application-level to anycast and network appliance approaches? You’ll find them all in the Data Center 3.0 webinar.

What Are HTTP Sessions?

HTTP (in all its versions) has a fundamental problem: it’s stateless. Every single request sent by the client is totally independent and not linked to the previous requests, making it impossible to implement web site logins, shopping carts…

Well, since all the features I mentioned in the previous paragraph work, there must be another trick involved: HTTP sessions implemented with specially-crafted URLs or session cookies:

- Whenever a new client visits a web site, the web server creates a session for the new client, and sends the session identifier (usually in an HTTP cookie) to the client;

- As the web server processes client requests, it stores per-client state (username, preferences, shopping cart contents…) in session variables. The web server automatically saves these session variables and restores them, based on the client’s session cookie, when the client makes the next request.
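The mechanism described in the list above could be sketched like this in Python. The cookie name `SESSIONID`, the `SessionServer` class, and keeping session variables in an in-memory dictionary are assumptions for illustration; real frameworks add expiry, locking, and secure cookie attributes.

```python
import secrets


class SessionServer:
    """Server-side HTTP sessions keyed by a session cookie (hypothetical sketch)."""

    COOKIE = "SESSIONID"  # hypothetical cookie name

    def __init__(self) -> None:
        # Session variables are local to this server, similar in effect to
        # PHP's default file-based session storage.
        self.sessions: dict[str, dict] = {}

    def handle(self, cookies: dict[str, str]) -> tuple[dict, dict[str, str]]:
        """Return (session variables, cookies to set in the response)."""
        sid = cookies.get(self.COOKIE)
        set_cookies: dict[str, str] = {}
        if sid is None or sid not in self.sessions:
            sid = secrets.token_urlsafe(16)  # new client: create a new session
            self.sessions[sid] = {}
            set_cookies[self.COOKIE] = sid   # send the session ID to the client
        # The caller mutates the returned dict; changes persist for later requests.
        return self.sessions[sid], set_cookies
```

A first request without a cookie creates a session and returns a cookie to set; sending that cookie back retrieves the same session variables. A second `SessionServer` instance knows nothing about that session, which is exactly the problem sticky sessions work around.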

What Are Sticky Sessions?

With the default configuration, many scripting languages (including PHP) save session data in temporary files residing on the web server. The client session state is thus tied to a single web server, and all subsequent client requests must be sent to the same server – the HTTP sessions must be sticky.

The load balancer could implement session stickiness using additional session cookies generated by the load balancer or by caching the mapping between client IP addresses and web servers.
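Both stickiness methods can be sketched in a few lines of Python. The class, the cookie name `LBSERVER`, and the round-robin choice for new clients are hypothetical; the point is where the state lives. With cookie-based stickiness the client carries the client-to-server mapping, while with source-IP stickiness the load balancer has to keep one cache entry per client.

```python
class StickyBalancer:
    """Sketch of two load-balancer stickiness methods (hypothetical)."""

    COOKIE = "LBSERVER"  # hypothetical name of the cookie the LB inserts

    def __init__(self, servers: list[str]) -> None:
        self.servers = servers
        self._rr = 0
        self.ip_cache: dict[str, str] = {}  # client IP -> server, kept on the LB

    def _pick(self) -> str:
        """Round-robin choice for clients without an existing mapping."""
        server = self.servers[self._rr % len(self.servers)]
        self._rr += 1
        return server

    def by_cookie(self, cookies: dict[str, str]) -> tuple[str, dict[str, str]]:
        """Cookie stickiness: the client carries the mapping, the LB stores nothing."""
        server = cookies.get(self.COOKIE)
        if server in self.servers:
            return server, {}
        server = self._pick()
        return server, {self.COOKIE: server}  # cookie to insert into the response

    def by_source_ip(self, client_ip: str) -> str:
        """Source-IP stickiness: the LB must cache one entry per client."""
        if client_ip not in self.ip_cache:
            self.ip_cache[client_ip] = self._pick()
        return self.ip_cache[client_ip]
```

Note that `ip_cache` grows with the number of clients, not with the number of active TCP sessions, which is the scaling issue discussed below.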

In the old days of short-lived HTTP connections, the state generated by session stickiness was a major nightmare – the number of clients that had to be cached was an order of magnitude (or more) larger than the number of active TCP sessions.

With persistent HTTP connections, the difference between the number of active TCP sessions and the number of clients probably becomes negligible (or at least smaller). I would appreciate real-life data points; please write a comment.

Want to know what persistent HTTP connections are? I explained them in the free TCP, HTTP and SPDY webinar.

Do We Need Sticky Sessions?

Short answer: NO!

Every web server scripting environment I looked at has a mechanism to store client session data in a data store shared among all servers, making session stickiness totally unnecessary. Using a shared data store, any web server can retrieve the client session data on demand, so there’s no need for the load balancer to track client-to-server mappings.
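A minimal sketch of the idea, assuming a hypothetical `SharedStore` class that stands in for memcached or Redis (a real deployment would use a client library for one of those): every web server loads the session from the shared store on each request and writes it back, so consecutive requests from the same client can land on different servers.

```python
import copy
import secrets
from typing import Optional


class SharedStore:
    """Hypothetical in-memory stand-in for memcached/Redis shared by all servers."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    def get(self, key: str) -> Optional[dict]:
        value = self._data.get(key)
        # Return a copy, as a network fetch would.
        return copy.deepcopy(value) if value is not None else None

    def set(self, key: str, value: dict) -> None:
        self._data[key] = copy.deepcopy(value)  # store a copy, as a network write would


class WebServer:
    """A web server that keeps no session state of its own."""

    def __init__(self, name: str, store: SharedStore) -> None:
        self.name = name
        self.store = store

    def handle(self, session_id: Optional[str], item: Optional[str] = None) -> tuple[str, dict]:
        """Load the session from the shared store, update it, and write it back."""
        if session_id is None:
            session_id = secrets.token_urlsafe(16)  # new client: new session ID
        session = self.store.get(session_id) or {"cart": []}
        if item is not None:
            session["cart"].append(item)
        self.store.set(session_id, session)
        return session_id, session
```

With this arrangement, the load balancer can distribute requests round-robin (or any other way) without tracking client-to-server mappings.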

Added 2017-03-28: Some readers understood the shared data store to mean a transactional database like MySQL. You should use a solution that meets your consistency requirements – in most cases memcached is more than good enough.

The only reason the scripting languages (PHP, Python…) store session data on the local web server (most often in the local file system) is that the local file system is always available and thus requires no configuration – the installation will work even when the web server admin can’t read.

To make matters worse, it takes one or two lines of web server configuration to enable the shared data store, but because “it cannot be done”, in many cases the networking team buys yet another expensive load balancer, giving the CIO another reason to complain about the costs of data center networking.

Even More Load Balancing

I described how you can use load balancing to implement active/active data centers in the Designing Active/Active Data Centers webinar, and Ethan Banks described his hands-on experience in the autumn 2016 session of the Building Next-Generation Data Center webinar (you get access to the recordings of the autumn 2016 session as soon as you register).

That way, the load-balancer does not maintain state, and if you need to scale horizontally, you can ECMP in front of it.

As for passing the source IP to backends when HTTP doesn't allow it (for example, when using end-to-end encryption), you can use the PROXY protocol [1], supported by HAProxy, nginx, Varnish, Apache, et al.

[1] http://git.haproxy.org/?p=haproxy.git;a=blob_plain;f=doc/proxy-protocol.txt;hb=HEAD

It depends on what your acceptable failure (= losing session state) rate is, how often the topology changes, and whether you want to have load balancing beyond simple round-robin (or in your case hash-based).
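The hash-based selection mentioned above can be sketched as follows. The assumptions are labeled: SHA-256 of the client IP modulo the number of servers is a hypothetical choice (load balancers and ECMP implementations use their own hash functions), and `pick_server` and `remapped_fraction` are invented names. The load balancer keeps no per-client state, but a topology change moves clients between servers.

```python
import hashlib


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Stateless hash-based selection: same client IP -> same server, no table."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def remapped_fraction(clients: list[str], before: list[str], after: list[str]) -> float:
    """Fraction of clients whose server changes when the server list changes."""
    moved = sum(pick_server(c, before) != pick_server(c, after) for c in clients)
    return moved / len(clients)
```

With plain modulo hashing, growing from three to four servers keeps a client on its server only when its hash gives the same result modulo 3 and modulo 4 (3 out of every 12 hash values), so roughly three quarters of the clients move and lose any server-local session state. Consistent or rendezvous hashing reduces the churn to roughly the share taken over by the new server.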

Based on my limited experience, people who can't implement memcached for PHP sessions are usually also thinking about overly complex load balancing schemes.

And thanks a lot for the link to the PROXY protocol. I love to be proven wrong and pointed toward interesting new solutions.

I noticed Amazon ELB now supports the HAProxy PROXY protocol, which is great news.

But I still think layer 7 load balancing sucks [1].

[1] https://www.loadbalancer.org/blog/why-layer-7-sucks

1) As most browsers open multiple 'parallel' connections to a single (V)IP/site, I wonder whether the complexity introduced (from a troubleshooting, tracing and monitoring POV) is worthwhile. This might inform your thoughts around TCP and HTTP session equality, btw.

2) Ditto for the overhead of multiple servers having to perform lookups against the shared state 'database'. Presumably they would have to do so for every request in case the state had changed (via a connection to another server). This obviously won't be an issue with HTTP/2.

3) The load balancer is required (for just the load balancing) anyway (although there are other ways), so why not avoid issues 1), 2) and 4) above and below? Perhaps the ability to not lose state when a server fails is enough of a benefit?

4) The availability of the shared state 'database' itself needs to be addressed. So more HA considerations, more complexity, etc. especially considering 3).

5) The ridiculous and expensive load balancer option is chosen because that's what the CIO/org demands and allows itself to be sold, not because it's what's needed. That's a whole different subject and problem. As per 3), it would be there anyway and, of course, all vendors will take full advantage.

In summary, yes I agree, it's not required but I also think moving the solution elsewhere isn't necessarily worthwhile, at least if you have a LB anyway. "Most of the time, it depends" and all that.

A genuinely thought provoking and informative piece, many thanks.

#1 - Out of the 100+ requests needed to build a web page (yeah, sad statistics), often only one is handled by a script that touches session state. Most others are static resources. See http://httparchive.org/interesting.php for details (and keep in mind that "scripts" is JavaScript and "HTML" is what is produced by a PHP/Python/whatever script)

#2 - A single Memcached instance can handle 200K requests/second. https://github.com/memcached/memcached/wiki/Performance

#3 - There's a slight (cost) difference between running HAProxy or nginx and F5 LTM.

#4 - I would hope that a well-written web application caches all sorts of things anyway, so you have the caching tier up and running anyway. I know that's often not the case though...

#5 - In some cases you're forced to buy the ridiculously expensive load balancer because that's the only way to make applications work. OTOH, I know organizations that bought an expensive load balancer instead of using open source software so they'd have someone to sue when it fails. At least some of those same organizations use open source software to run the applications. Go figure...

Just one remark regarding X-Forwarded-For with SSL/TLS all the way to the servers: it's actually possible with SSL bridging on the load balancer; whether that's a good idea depends on the trade-offs...

Deviating somewhat from the topic of session stickiness and swinging to the question "why would you buy an expensive LB?", I'd say there are reasons beyond just distributing traffic to backend nodes that prompt people to spill $$$.

LB, or rather "ADC" can, and often does, provide a bunch of additional functionality that either doesn't belong in the app, or is shared. For example, generating server response performance metrics feed for your ops, URI request routing, WAF/DLP, SSO, etc.

[shameless plug]I wrote a blog not too long ago that gives an example of using in-line request and response processing to "integrate" single page app with static object store and a 3rd party web app (wordpress blog): https://telecomoccasionally.wordpress.com/2016/12/06/running-a-single-page-app-and-cant-wait-for-lambdaedge-heres-an-alternative/ [/shameless plug]

On apps using open source: you're supposedly setting up an LB to protect your app users from backend failures, which is what your LB is there for. In my book this means that LB's availability is more important than that of an individual scale-out backend server. Which means that you may see risk profiles differently, and be more willing to pay for one and not the other.

Just my 2c.

Thanks.

With regard to the SSL offloading, there is probably a balance point where the hardware solution can be cheaper. On the other hand, the extra scalability and availability of a cloud/container setup with (loads of) open source software load balancers somehow appeals to me.

Yes, memcached/Redis/[other Out-Of-Process storage] can be fast, but sometimes you need faster.

The following configuration is absolutely viable:

1. Nginx (or haproxy) with sticky sessions

2. A lot of lightweight appservers working mostly with their local in-process cache

3. Shared session storage as a fallback for cases of local cache miss

Without sticky sessions, app servers will almost always use the shared storage.
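The configuration from the list above might look roughly like this on each application server. This is a hypothetical sketch: `TwoTierSessions` is an invented name, a plain dictionary stands in for the shared store (memcached, Redis, etc.), and cache invalidation is ignored. A local copy can go stale if another server updates the session, which is why the scheme relies on sticky sessions keeping each client on one server.

```python
from typing import Optional


class TwoTierSessions:
    """Local in-process session cache in front of a shared store (hypothetical)."""

    def __init__(self, shared: dict[str, dict]) -> None:
        self.local: dict[str, dict] = {}  # fast, in-process, per server
        self.shared = shared              # stand-in for memcached/Redis
        self.local_hits = 0
        self.shared_reads = 0

    def get(self, sid: str) -> Optional[dict]:
        if sid in self.local:
            self.local_hits += 1          # the common case with sticky sessions
            return self.local[sid]
        self.shared_reads += 1            # local miss: fall back to shared storage
        session = self.shared.get(sid)
        if session is not None:
            self.local[sid] = session     # warm the local cache
        return session

    def set(self, sid: str, session: dict) -> None:
        self.local[sid] = session
        self.shared[sid] = session        # write-through keeps the fallback current
```

With sticky sessions, nearly every `get` is a local hit; without them, a request usually lands on a server that has not seen the session yet, and `shared_reads` dominates.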

Although I must admit:

a) Such configuration is obviously more complex and can not be recommended for all cases

b) I know nothing about "expensive load balancers" - I'm talking about nginx, HAProxy and... IIS (wow! I just remembered IIS)

So... sticky sessions have their uses.

However, if you care enough about performance to consider impact of memcached lookup versus in-process memory cache, I'm sure you don't need rules-of-thumb ;)

1. A software-implemented VPN (IPsec or GRE) in a scale-out architecture (vs. a dedicated hardware/appliance VPN)

2. As a front end to a perimeter reverse proxy/LB like Envoy (e.g. Lyft fronts Envoy with an Amazon ELB)

I'm assuming that you need sticky sessions for 1 but not for 2 (assuming policy is replicated across all Envoy proxies)?

#2 - It depends on how well-designed the rest of your application stack is. If you need session stickiness from reverse proxy to application server, then you also need session stickiness from front-end LB to reverse proxy.