Is Open vSwitch Control Plane In-Band or Out-of-Band?

A few days ago I described how most OpenFlow data center fabric solutions use an out-of-band control plane (a separate control-plane network). Can we do something similar when running an OpenFlow switch (example: Open vSwitch) in a hypervisor host?

TL;DR answer: Sure we can. Does it make sense? It depends.

Open vSwitch also supports an in-band control plane, but that’s not the focus of this post.

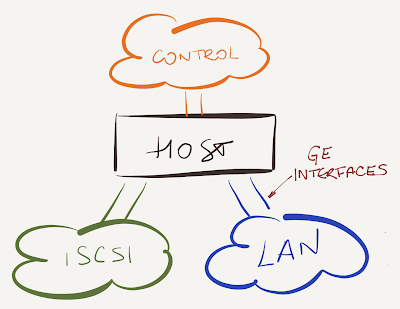

If you buy servers with a half dozen interfaces (I wouldn't), then it makes perfect sense to follow the usual design best practices published by hypervisor vendors, and allocate a pair of interfaces to user traffic, another pair to management/control plane/vMotion traffic, and a third pair to storage traffic. Problem solved.

Buying servers with two 10GE uplinks (what I would do) definitely makes your cabling friend happy and reduces the overall networking costs, but it does result in a slightly more interesting hypervisor configuration.

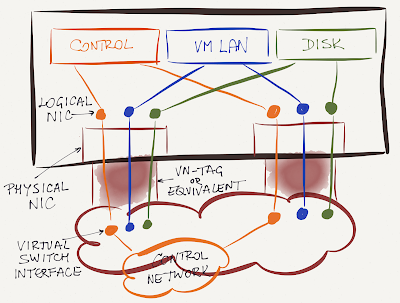

Best case, you split the 10GE uplinks into multiple virtual uplink NICs (example: Cisco's Adapter FEX, Broadcom's NIC Embedded Switch, or SR-IOV) and transform the problem into a known problem (see above) … but what if you're stuck with two physical uplinks?

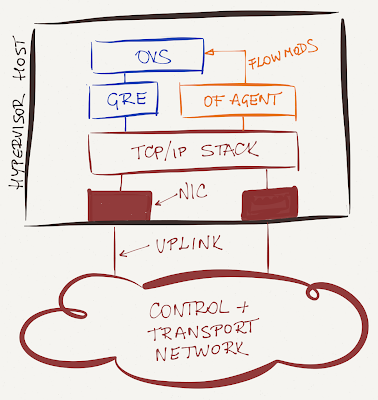

Overlay Virtual Networks to the Rescue

If you implement all virtual networks (used by a particular hypervisor host) with an overlay virtual networking technology, you don't have a problem. The virtual switch in the hypervisor (for example, OVS) has no external connectivity of its own; it just generates IP packets that the host has to send across the transport network. The uplinks are thus used for control-plane traffic and encapsulated user traffic - the OpenFlow switch never touches the physical uplinks.

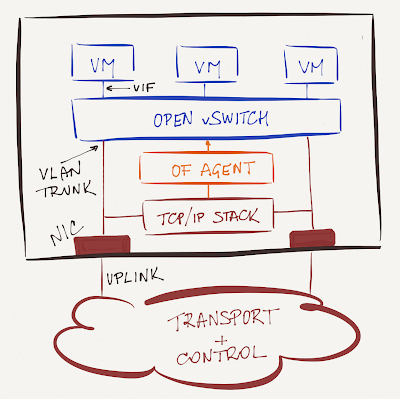
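To make the overlay case a bit more concrete, here is a minimal sketch that builds (but does not run) the `ovs-vsctl` commands for a bridge whose only non-VM ports are VXLAN tunnels. The bridge name `br-overlay`, the `vxlanN` port names and the remote addresses are assumptions made for illustration, not something any particular product uses.

```python
def overlay_bridge_commands(bridge, remote_ips):
    """Build ovs-vsctl commands for a bridge that has only VXLAN tunnel ports.

    No physical NIC is ever added to the bridge: the encapsulated packets
    leave the host through its regular IP stack and routing table.
    """
    commands = [["ovs-vsctl", "--may-exist", "add-br", bridge]]
    for index, remote_ip in enumerate(remote_ips):
        port = f"vxlan{index}"  # hypothetical naming scheme
        commands.append([
            "ovs-vsctl", "--may-exist", "add-port", bridge, port,
            "--", "set", "interface", port, "type=vxlan",
            f"options:remote_ip={remote_ip}",
            "options:key=flow",  # let OpenFlow rules pick the VNI per flow
        ])
    return commands


if __name__ == "__main__":
    # Documentation-range addresses standing in for other hypervisor hosts.
    for command in overlay_bridge_commands("br-overlay", ["192.0.2.11", "192.0.2.12"]):
        print(" ".join(command))
```

The uplinks never appear in the generated commands, so nothing the OpenFlow controller does to this bridge can cut the host off from its own control-plane connectivity.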

Integrating OpenFlow Switch with Physical Network

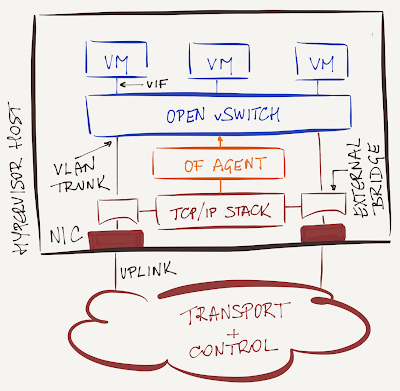

Finally, there's the scenario where an OpenFlow-based virtual switch (usually OVS) provides VLAN-based switching, and potentially interferes with control-plane traffic running over shared uplinks. Most products solve this challenge by somehow inserting the control-plane TCP stack in parallel with the OpenFlow switch.

For example, the OVS Neutron agent creates a dedicated bridge for each uplink, and connects OVS uplinks and the host TCP/IP stack to the physical uplinks through the per-interface bridge. That setup ensures the control-plane traffic continues to flow even when a bug in the Neutron agent or OVS breaks VM connectivity across OVS. For more details see the OpenStack Networking in Too Much Detail blog post published on the Red Hat OpenStack site.
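The per-uplink bridge idea can be sketched roughly as follows. This is a hand-written approximation, not the commands the Neutron agent actually issues: the `br-<uplink>`, `int-…` and `phy-…` names mimic a common naming convention, and the helper function, the host address and the choice of patch ports are assumptions. The commands are only built as lists, never executed.

```python
def uplink_bridge_commands(uplink, host_cidr, integration_bridge="br-int"):
    """Build commands that put a small bridge between a physical uplink,
    the host TCP/IP stack and the OpenFlow-controlled integration bridge."""
    uplink_bridge = f"br-{uplink}"
    int_patch = f"int-{uplink_bridge}"  # patch port on the integration bridge
    phy_patch = f"phy-{uplink_bridge}"  # patch port on the uplink bridge

    def patch(bridge, port, peer):
        return ["ovs-vsctl", "--may-exist", "add-port", bridge, port,
                "--", "set", "interface", port, "type=patch",
                f"options:peer={peer}"]

    return [
        # The physical NIC is attached to its own bridge, not to br-int.
        ["ovs-vsctl", "--may-exist", "add-br", uplink_bridge],
        ["ovs-vsctl", "--may-exist", "add-port", uplink_bridge, uplink],
        # The host stack uses the uplink bridge's internal port, so
        # control-plane traffic bypasses the integration bridge entirely.
        ["ip", "address", "add", host_cidr, "dev", uplink_bridge],
        ["ip", "link", "set", uplink_bridge, "up"],
        # VM traffic reaches the uplink through a pair of patch ports.
        ["ovs-vsctl", "--may-exist", "add-br", integration_bridge],
        patch(integration_bridge, int_patch, phy_patch),
        patch(uplink_bridge, phy_patch, int_patch),
    ]


if __name__ == "__main__":
    for command in uplink_bridge_commands("eth0", "192.0.2.10/24"):
        print(" ".join(command))
```

In this layout a broken flow table on the integration bridge affects only the traffic crossing the patch ports; the host address lives on the uplink bridge and keeps working as long as that bridge forwards normally.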

More Information

Visit SDN, Cloud or Virtualization resources on ipSpace.net, or get in touch if you need design or deployment advice.
