Virtual switches need BPDU guard

An engineer attending my VMware Networking Deep Dive webinar has asked me a tough question that I was unable to answer:

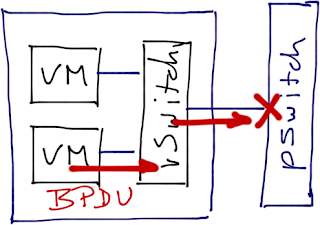

What happens if a VM running within a vSphere host sends a BPDU? Will it get dropped by the vSwitch or will it be sent to the physical switch (potentially triggering BPDU guard)?

I got the answer from visibly harassed Kurt (@networkjanitor) Bales during the Networking Tech Field Day; one of his customers has managed to do just that.

Update 2011-11-04: The post was rewritten based on extensive feedback from Cisco, VMware and numerous readers.

Here’s a sketchy overview of what was going on: they were running a Windows VM inside his VMware infrastructure, decided to configure bridging between a vNIC and a VPN link, and the VM started to send BPDUs through the vNIC. The vSwitch ignored them, but the physical switch didn’t; it shut down the port, cutting a number of VMs off the network.

Best case, BPDU guard on the physical switch blocks but doesn’t shut down the port – all VMs pinned to that link get blackholed, but the damage stops there. More often BPDU guard shuts down the physical port (the reaction of BPDU guard is vendor/switch-specific), VMs using that port get pinned to another port, and the misconfigured VM triggers BPDU guard on yet another port, until the whole vSphere host is cut off from the rest of the network. Absolutely worst case, you’re running VMware High Availability, the vSphere host triggers isolation response, and the offending VM is restarted on another host (eventually bringing down the whole cluster).
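The cascade in the worst-case scenarios is easy to reproduce in a toy model. The Python sketch below is a hypothetical simulation, not VMware or switch code. It assumes the offending VM is always re-pinned to the first remaining active uplink and that every uplink connects to a switch port with BPDU guard enabled.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Toy model of a vSphere host whose VMs are pinned to physical uplinks."""
    uplinks: list[str]
    err_disabled: set[str] = field(default_factory=set)

    def active_uplinks(self) -> list[str]:
        # Uplinks that BPDU guard has not (yet) shut down
        return [u for u in self.uplinks if u not in self.err_disabled]


def bpdu_guard_cascade(host: Host, shutdown_port: bool = True) -> list[str]:
    """Return, in order, the uplinks hit by one VM that keeps sending BPDUs.

    shutdown_port=True:  the physical switch err-disables the port, so the
                         VM fails over to the next uplink and tries again.
    shutdown_port=False: the switch only blocks the port; VMs pinned to that
                         uplink are blackholed, but nothing fails over.
    """
    affected: list[str] = []
    while host.active_uplinks():
        # Assumption: the VM is (re)pinned to the first active uplink
        pinned = host.active_uplinks()[0]
        affected.append(pinned)
        if not shutdown_port:
            break
        host.err_disabled.add(pinned)
    return affected


host = Host(["vmnic0", "vmnic1", "vmnic2"])
print(bpdu_guard_cascade(host))   # ['vmnic0', 'vmnic1', 'vmnic2']
print(host.active_uplinks())      # []
```

Setting `shutdown_port=False` models the best case: only the first uplink is blackholed and the rest of the host stays reachable.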

There is only one good solution to this problem: implement BPDU guard on the virtual switch. Unfortunately, no virtual switch running in VMware environment implements BPDU guard.

Interestingly, the same functionality would be pretty easy to implement in Xen/XenServer/KVM, either by modifying the open-source Open vSwitch or by forwarding the BPDU frames to an OpenFlow controller, which would then block the offending port.
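A rough idea of the controller side, as a Python sketch: the switch is assumed to punt frames sent to the spanning-tree multicast addresses to the controller, which then installs a drop rule for the ingress port. `BpduGuardController`, `packet_in` and the dictionary-shaped flow rule are hypothetical stand-ins, not the API of Open vSwitch or of any real OpenFlow controller framework.

```python
from typing import Optional

# Destination MAC addresses of spanning-tree BPDUs
STP_DST_MAC = "01:80:c2:00:00:00"    # IEEE 802.1D/802.1w/802.1s
PVST_DST_MAC = "01:00:0c:cc:cc:cd"   # Cisco PVST+ / Rapid-PVST+


class BpduGuardController:
    """Hypothetical controller logic: block any switch port that sends a BPDU."""

    def __init__(self) -> None:
        self.blocked_ports: set[int] = set()
        self.log: list[str] = []

    def packet_in(self, in_port: int, dst_mac: str) -> Optional[dict]:
        """Handle a frame punted to the controller; return a flow rule to install, if any."""
        if dst_mac.lower() not in (STP_DST_MAC, PVST_DST_MAC):
            return None  # not a BPDU: leave it to the normal forwarding logic
        if in_port not in self.blocked_ports:
            self.blocked_ports.add(in_port)
            self.log.append(f"BPDU received on port {in_port}: port blocked")
        # Hypothetical flow rule: match everything from in_port; no actions means drop
        return {"priority": 65535, "match": {"in_port": in_port}, "actions": []}


ctrl = BpduGuardController()
print(ctrl.packet_in(7, "01:80:C2:00:00:00"))
print(ctrl.log)
```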

Nexus 1000V seems to offer a viable alternative. It has an implicit BPDU filter (you cannot configure it) that would block the BPDUs coming from a VM, but that only hides the problem – you could still get forwarding loops if a VM bridges between two vNICs connected to the same LAN. However, you can reject forged transmits (source-MAC-based filter, a standard vSphere feature) to block bridged packets coming from a VM.
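In essence, the reject forged transmits check compares the source MAC address of every frame a VM sends with the MAC address assigned to its vNIC. A minimal Python sketch, assuming one MAC address per vNIC; `Frame` and `accept_from_vnic` are illustrative names, not a vSphere API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str


def accept_from_vnic(frame: Frame, vnic_mac: str, reject_forged: bool = True) -> bool:
    """Would the vSwitch accept this frame sent by a VM through a vNIC?"""
    if not reject_forged:
        # Default in vSphere 4.1/5 according to the ESX Configuration Guide
        return True
    # With "reject forged transmits", only the vNIC's own MAC may appear as source
    return frame.src_mac.lower() == vnic_mac.lower()


vnic_mac = "00:50:56:00:00:01"
own = Frame(src_mac=vnic_mac, dst_mac="ff:ff:ff:ff:ff:ff")
# A broadcast that originated outside the host, re-sent by a bridging VM
bridged = Frame(src_mac="00:1b:21:aa:bb:cc", dst_mac="ff:ff:ff:ff:ff:ff")
print(accept_from_vnic(own, vnic_mac))      # True
print(accept_from_vnic(bridged, vnic_mac))  # False
```

The check doesn't necessarily stop the BPDUs themselves: a bridging VM may well send them with its own vNIC MAC address as the source, which is why it's paired with a BPDU filter.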

According to VMware’s documentation (ESX Configuration Guide) the default setting in vSphere 4.1 and vSphere 5 is to accept forged transmits.

Lacking Nexus 1000V, you can use a virtual firewall (example: vShield App) that can filter layer-2 packets based on ethertype. Yet again, you should combine that with rejection of forged transmits.
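When you build such a filter, keep in mind that IEEE STP BPDUs don't carry an EtherType at all: they use 802.3 framing (the type/length field holds a length) followed by an LLC header with DSAP and SSAP set to 0x42. A minimal Python sketch of the match, assuming untagged frames; Cisco PVST+ BPDUs, which use SNAP encapsulation, are not covered.

```python
def is_ieee_bpdu(frame: bytes) -> bool:
    """Rough check: is this untagged Ethernet frame an IEEE STP/RSTP/MSTP BPDU?"""
    if len(frame) < 17:             # 14-byte MAC header + 3-byte LLC header
        return False
    type_or_length = int.from_bytes(frame[12:14], "big")
    if type_or_length > 1500:
        return False                # Ethernet II: the field is an EtherType
    dsap, ssap, control = frame[14], frame[15], frame[16]
    return dsap == 0x42 and ssap == 0x42 and control == 0x03


dst = bytes.fromhex("0180c2000000")   # STP multicast address
src = bytes.fromhex("005056000001")   # hypothetical vNIC MAC address
bpdu = dst + src + (38).to_bytes(2, "big") + bytes([0x42, 0x42, 0x03]) + bytes(35)
ipv4 = dst + src + bytes.fromhex("0800") + bytes(20)
print(is_ieee_bpdu(bpdu), is_ieee_bpdu(ipv4))  # True False
```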

No BPDUs past this point

In theory, an interesting approach might be to use VM-FEX. A VM using VM-FEX is connected directly to a logical interface in the upstream switch and the BPDU guard configured on that interface would shut down just the offending VM. Unfortunately, I can’t find a way to configure BPDU guard in UCS Manager.

Other alternatives to BPDU guard in a vSwitch range from bad to worse:

Disable BPDU guard on the physical switch. You’ve just moved the problem from the access to the distribution layer (if you use BPDU guard there)... or you’ve made the whole layer-2 domain totally unstable, as any VM could cause an STP topology change.

Enable BPDU filter on the physical switch. Even worse – if someone actually manages to configure bridging between two vNICs (or two physical NICs in a Hyper-V host), you’re toast; BPDU filter causes the physical switch to pretend the problem doesn’t exist.

Enable BPDU filter on the physical switch and reject forged transmits in vSwitch. This one protects against bridging within a VM, but not against physical server misconfiguration. If you’re absolutely utterly positive all your physical servers are vSphere hosts, you can use this approach (vSwitch has built-in loop prevention); if there’s even a minimal chance someone might connect a bare-metal server or a Hyper-V/XenServer host to your network, don’t even think about using BPDU filter on the physical switch.

Anything else? Please describe your preferred solution in the comments!

Summary

BPDU filter available in Nexus 1000V or ethertype-based filters available in virtual firewalls can stop the BPDUs within the vSphere host (and thus protect the physical links). If you combine either of them with forged transmit rejection, you get a solution that protects the network from almost any VM-level misconfiguration.

However, I would still prefer a proper BPDU guard with logging in the virtual switches for several reasons:

- BPDU filter just masks the configuration problem;

- If the vSwitch accepts forged transmits, you could get an actual forwarding loop;

- While the solution described above does protect the network, it also makes troubleshooting a lot more obscure – a clear logging message in vCenter telling the virtualization admin that BPDU guard has shut down a vNIC would be way better;

- Last but definitely not least, someone just might decide to change the settings and accept forged transmits (with potentially disastrous results) while troubleshooting a customer problem @ 2AM.
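The difference between filtering and a guard with logging is easiest to see side by side. Below is a hypothetical Python model of a single vNIC port; no VMware vSwitch offers such a per-port mode setting, and the class, its fields and the log message are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class VNicPort:
    """Hypothetical vSwitch port facing one vNIC."""
    name: str
    mode: str = "guard"            # "filter" or "guard" (invented setting)
    err_disabled: bool = False
    dropped_bpdus: int = 0
    events: list = field(default_factory=list)

    def receive_bpdu_from_vm(self) -> None:
        if self.mode == "filter":
            # Silent drop: the network is protected, but nobody learns about it
            self.dropped_bpdus += 1
        elif not self.err_disabled:
            # Guard: shut down only this vNIC and tell the virtualization admin why
            self.err_disabled = True
            self.events.append(f"BPDU guard: BPDU received from {self.name}, vNIC disabled")


quiet = VNicPort("vm1-eth0", mode="filter")
loud = VNicPort("vm2-eth0", mode="guard")
for port in (quiet, loud):
    port.receive_bpdu_from_vm()
    port.receive_bpdu_from_vm()
print(quiet.dropped_bpdus, quiet.events)   # 2 []
print(loud.err_disabled, loud.events)
```

With the filter, the only trace of the problem is a drop counter; with the guard, the damage is limited to one vNIC and the event says exactly what happened.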

Looking for more details?

You’ll find them in the VMware Networking Deep Dive webinar (buy a recording); if you’re interested in more than one webinar, consider the yearly subscription.

Use some cheap MPLS switches (so, not Cisco) to build a multipath routed core and run VPLS/VLL over it as required.

According to this (http://www.cisco.com/en/US/prod/collateral/switches/ps9441/ps9902/guide_c07-556626.html)

"Because the Cisco Nexus 1000V Series does not participate in Spanning Tree Protocol, it does not respond to Bridge Protocol Data Unit (BPDU) packets, nor does it generate them. BPDU packets that are received by Cisco Nexus 1000V Series Switches are dropped."

The way I'm reading that (and they have a nifty diagram above it) is that BPDU packets are dropped and not forwarded to the physical switch.

I'm not sure whether Kurt was running the 1000V or not... if he was, at the very least Cisco needs to clear this up.

While you could pre-populate blessed MACs at provisioning time in a hosted VM environment, I doubt that would fly in the enterprise arena.

Disclaimer: I am a Cisco SE.

There's still the risk that VMs loop traffic between themselves (VM <-> VM), but this is no different from two physical servers doing the same.

I would rephrase the title to "Virtual switches should prevent VMs from impacting the network topology". BPDU guard is just one way to do so. Nice to bring this into discussion though.

#2 - BPDU filter @ NX1KV does not prevent forwarding loops through two VMs. They're pretty hard to configure, but they could happen.

The missing bit (maybe I should include that as well) is that Kurt runs an IaaS environment and has no control over the stupid things his customers decide to do; he has to protect his network.

There is something you can do with VM-FEX, though: configure BPDU guard on Nexus 6100. Will insert that in the article.

Actually, I've seen an MCSE configure bridging between two Hyper-V interfaces in a bad-hair moment. Result: total network meltdown (yeah, BPDU guard was disabled and something probably filtered BPDUs).

In both cases, BPDUs would be dropped before they would hit the physical link. You'd still need "reject forged transmits" to prevent forwarding loops.

Going over all possible solutions, there is nothing that will cover every possible situation. Know your environment and act accordingly?

Really, it's no different from a server maxing out its interface by pulling random data from another source on the network.

In a typical public cloud environment I don't think this would be a problem, since your customers would most likely have only a menu-based system for ordering VMs and no direct access to vCenter. Although you still have the sysadmin issue. :)

The basic rule of thumb: you can drop BPDUs only in a guaranteed loop-free topology...
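One way to make that rule of thumb concrete: treat the layer-2 network as an undirected graph of forwarding paths and drop BPDUs only if the graph has no cycles. A Python sketch using union-find; what counts as a link is the modeling assumption (for example, a vSphere host's uplinks don't form a path through the vSwitch thanks to its built-in loop prevention, while a VM bridging two vNICs does).

```python
def has_l2_loop(links: list[tuple[str, str]]) -> bool:
    """Return True if the undirected layer-2 topology contains a cycle.

    Each tuple is one forwarding path between two bridging devices
    (switches, bridging VMs, ...); parallel paths count as a loop.
    """
    parent: dict[str, str] = {}

    def find(node: str) -> str:
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for a, b in links:
        root_a, root_b = find(a), find(b)
        if root_a == root_b:
            return True   # a and b were already connected: this link closes a loop
        parent[root_a] = root_b
    return False


print(has_l2_loop([("core", "access1"), ("access1", "esx1")]))               # False
print(has_l2_loop([("access1", "vm-bridge"), ("vm-bridge", "access1")]))     # True
```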

The primary use case is that virtual machines are not Ethernet bridges, so the current implementation works well for the common use case. However, in advanced use cases like this one, where the VM is a bridge running STP, the admin has to do a little more: either disable the bridging code in Windows via the registry (too complex, and it doesn't work in cloud environments where management can be delegated, but viable in non-cloud use cases), or use products from VMware and partners to drop the BPDUs. For example, vShield App has an L2 firewall capability: you can drop all BPDUs sent by VMs at will, with the point of enforcement on every vNIC of the VMs on the ESX host. If you do that, you have to ensure a loop-free topology.

Will VMware do something interesting in the vSwitch to address this? Stay tuned, Ivan would be the first to know :-)

Great rewrite on the article, by the way, offers much more visibility into the challenges and possible solutions. Kudos.

#2 - That forwarding loop between VMs cannot come from the outside, as the Nexus 1000V drops frames with a local source MAC address on ingress. It must happen another way.

#2 - You're almost right, but for the wrong reason ;) A bridging VM would forward an externally generated broadcast/multicast. To stop that, you have to use "reject forged transmits" to catch a flooded packet exiting the VM.

By default, the N1K performs a deja vu check on packets received from uplinks, preventing any loops.
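The idea behind such an ingress check: a frame arriving from the physical network should never carry the source MAC address of a VM attached to the same host; if it does, the frame has looped back. A hypothetical Python sketch of that decision, not the actual Nexus 1000V implementation; it assumes the set of local MAC addresses is learned from frames sent by local VMs.

```python
class DejaVuCheck:
    """Hypothetical model of an ingress check on a virtual switch's uplinks."""

    def __init__(self) -> None:
        self.local_macs: set[str] = set()

    def frame_from_vm(self, src_mac: str) -> None:
        # Remember the MAC addresses of VMs attached to this host
        self.local_macs.add(src_mac.lower())

    def accept_from_uplink(self, src_mac: str) -> bool:
        # A frame from the physical network carrying a local VM's source MAC
        # must have looped back (deja vu), so it is dropped.
        return src_mac.lower() not in self.local_macs


vem = DejaVuCheck()
vem.frame_from_vm("00:50:56:00:00:01")                 # VM sends a broadcast
print(vem.accept_from_uplink("00:50:56:00:00:01"))    # False: looped copy dropped
print(vem.accept_from_uplink("00:1b:21:aa:bb:cc"))    # True: external sender
```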

I'd recommend configuring BPDU filter and disabling BPDU guard on the upstream switch. To retain portfast mode on the ports facing the ESX hosts, it’s also important to set these parameters on a per-switchport basis, not globally: the global setting moves the switch port out of portfast mode once a BPDU is received…

Carlos and I did some testing on a 6506 w/ fairly recent IOS code:

    PRME-BL-6506-J06#show run interface g1/31
    Building configuration...
    Current configuration : 178 bytes
    !
    interface GigabitEthernet1/31
     switchport
     switchport mode access
     no ip address
     spanning-tree portfast
     spanning-tree bpdufilter enable
     spanning-tree bpduguard disable
    end

Before doing this, we’d see the BPDU guard kick in:

    PRME-BL-6506-J06#show log | i 1/31
    6w2d: %SPANTREE-SP-2-BLOCK_BPDUGUARD: Received BPDU on port GigabitEthernet1/31 with BPDU Guard enabled. Disabling port.
    6w2d: %PM-SP-4-ERR_DISABLE: bpduguard error detected on Gi1/31, putting Gi1/31 in err-disable state
    PRME-BL-6506-J06#

Then we switched to the configuration shown above (portfast enabled, BPDU filter enabled, BPDU guard disabled), and the port moved from blocking to forwarding as per the portfast definition, with the BPDU counters at zero… The key thing is to do this on a per-port basis and not globally.

    PRME-BL-6506-J06#show spanning-tree int g1/31 portfast
    VLAN0001 enabled
    PRME-BL-6506-J06#show spanning-tree int g1/31 detail
    Port 31 (GigabitEthernet1/31) of VLAN0001 is forwarding
    Port path cost 4, Port priority 128, Port Identifier 128.31.
    Designated root has priority 0, address 0004.961e.6f70
    Designated bridge has priority 32769, address 0007.8478.8c00
    Designated port id is 128.31, designated path cost 14
    Timers: message age 0, forward delay 0, hold 0
    Number of transitions to forwarding state: 1
    The port is in the portfast mode
    Link type is point-to-point by default
    Bpdu filter is enabled
    BPDU: sent 0, received 0
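If you need to check this on many ESX-facing ports, a small parser over the show output can help. The Python sketch below is hypothetical and matches only the exact lines in the sample output above; other IOS releases or platforms may format them differently.

```python
import re


def parse_stp_port_detail(output: str) -> dict:
    """Pull a few fields out of 'show spanning-tree interface ... detail' output."""
    counters = re.search(r"BPDU: sent (\d+), received (\d+)", output)
    return {
        "forwarding": re.search(r"\bis forwarding\b", output) is not None,
        "portfast": "The port is in the portfast mode" in output,
        "bpdu_filter": "Bpdu filter is enabled" in output,
        "bpdus_sent": int(counters.group(1)) if counters else None,
        "bpdus_received": int(counters.group(2)) if counters else None,
    }


sample = """Port 31 (GigabitEthernet1/31) of VLAN0001 is forwarding
The port is in the portfast mode
Bpdu filter is enabled
BPDU: sent 0, received 0"""
status = parse_stp_port_detail(sample)
print(status["forwarding"] and status["bpdu_filter"] and status["bpdus_sent"] == 0)  # True
```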

I like some of vGW's features (vGW is Juniper's host firewall solution), but at this time it can't block BPDUs or improperly tagged frames...

Assumption: the vSwitch truly provides loop-free forwarding, as Ivan has mentioned/proven in other blog posts (with forged transmits set to reject).

BPDU filtering, enabled globally, filters outbound BPDUs on all portfast/edge ports. It still sends "a few" at link up to prevent STP race conditions and the ugly loop that could result from them. If the port does receive BPDUs, it falls out of portfast/edge mode.
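The difference between the per-interface filter used in the 6506 test and the global filter described here can be captured in a small state model. A Python sketch with these assumptions: the global form corresponds to the IOS `spanning-tree portfast bpdufilter default` command, the link-up burst is ten BPDUs (the comment above only says "a few"), and the model ignores what happens after the port reverts to regular STP.

```python
from dataclasses import dataclass


@dataclass
class EdgePort:
    """Rough model of BPDU filter behavior on a Cisco IOS portfast port."""
    filter_scope: str            # "interface" (spanning-tree bpdufilter enable) or "global"
    portfast: bool = True
    filtering: bool = True
    bpdus_sent: int = 0
    bpdus_received: int = 0

    def link_up(self, startup_bpdus: int = 10) -> None:
        if self.filter_scope == "global":
            # Global filtering still sends a short burst of BPDUs at link up
            # (the count is an assumption), so a looped port can be detected.
            self.bpdus_sent += startup_bpdus
        # Interface-level filtering sends nothing at all.

    def receive_bpdu(self) -> None:
        if self.filter_scope == "interface":
            return  # dropped and ignored: STP is effectively off on this port
        self.bpdus_received += 1
        # Global filtering: any received BPDU turns this into a regular STP port
        self.portfast = False
        self.filtering = False


per_port = EdgePort("interface")
per_port.link_up()
per_port.receive_bpdu()
glob = EdgePort("global")
glob.link_up()
glob.receive_bpdu()
print(per_port.portfast, glob.portfast)  # True False
```

The per-interface port keeps its zero counters no matter what arrives, which matches the `BPDU: sent 0, received 0` line in the 6506 output; the globally filtered port gives up portfast as soon as it hears a BPDU.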

So, if a vNIC in ESX is bridged, the BPDUs never leave the pSwitch after the initial link-up, so they can't loop around through the vSwitch and get reflected back to the pSwitch. This way, you get the following benefits:

a) One bad VM won't trigger BPDU guard and thereby isolate a hypervisor (or a cluster, in Ivan's example).

b) A miscabled host attached to a pSwitch port will fall out of portfast mode if BPDUs are seen from it. This protects you from a bad sysadmin or cabling job where a device without a loop-free vSwitch loops the network.

Basically, enabling BPDU filter globally addresses Ivan's concern in the above blog post about a non-ESXi host being plugged into the network and causing a loop. If such a host does get plugged in and creates a loop, the switch will see the BPDUs at link up and move the ports out of portfast/edge mode.

The only situation where this breaks down is a non-ESX hypervisor with a vSwitch that does not guarantee loop-free forwarding. In this situation, the pSwitch has seen the uplinks up and online for a while and hasn't sent any BPDUs since link-up, so a loop in the non-ESX vSwitch could take down your whole data center. Then, I'm afraid, storm control is your only friend.
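Storm control won't stop such a loop, but it caps the damage by suppressing broadcast traffic on a port once it exceeds a threshold, usually expressed as a percentage of link bandwidth and measured per interval. A simplified Python model with rising and falling thresholds, loosely following Cisco IOS `storm-control broadcast level`; it assumes one measurement per interval and ignores platform-specific details of what exactly gets dropped.

```python
from typing import Optional


def storm_control(rates_pct: list[float], rising: float,
                  falling: Optional[float] = None) -> list[bool]:
    """For each measurement interval, return True if broadcasts are being suppressed.

    rates_pct: measured broadcast traffic per interval, as % of link bandwidth
    rising:    suppression starts when the rate exceeds this level
    falling:   suppression stops when the rate drops below this level
               (defaults to the rising level)
    """
    falling = rising if falling is None else falling
    suppressed = False
    result: list[bool] = []
    for rate in rates_pct:
        if not suppressed and rate > rising:
            suppressed = True
        elif suppressed and rate < falling:
            suppressed = False
        result.append(suppressed)
    return result


# A burst of looped broadcasts on an ESX-facing port, then back to normal
print(storm_control([1, 2, 80, 95, 90, 3], rising=10))
# [False, False, True, True, True, False]
```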

Outside of my virtual-facing pSwitches, I would never use BPDU filter, not even globally... BPDU guard is a must for the rest of my network.

So what happens if we use L2 filters inbound on the physical port to drop all BPDU packets?

For example:

    mac access-list extended BPDU
     ! deny IEEE STP (LLC DSAP/SSAP 0x42)
     deny any any lsap 0x4242 0x0
     ! deny Cisco PVST+/Rapid-PVST+ (SNAP; this also matches CDP, VTP and DTP)
     deny any any lsap 0xAAAA 0x0
     permit any any
    int g0/1
     mac access-group BPDU in

IMHO this would drop incoming frames with BPDUs.

But maybe I'm wrong.
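The following sketch covers only the ACL's matching logic, not whether a given platform applies port ACLs to BPDUs before its spanning-tree process sees them. It's a Python model assuming first-match evaluation, an implicit deny at the end, and wildcard-mask semantics for the lsap mask (0 bits must match, consistent with the 0x0 masks in the example). It also shows a side effect worth noting: 0xAAAA matches every SNAP-encapsulated frame, not just PVST+ BPDUs.

```python
from typing import Optional

# The MAC ACL above as (action, lsap value, wildcard mask); None means "any"
BPDU_ACL = [
    ("deny", 0x4242, 0x0000),    # IEEE STP: DSAP/SSAP 0x42
    ("deny", 0xAAAA, 0x0000),    # SNAP: PVST+, but also CDP, VTP, DTP
    ("permit", None, None),      # permit any any
]


def evaluate(acl: list, lsap: Optional[int]) -> str:
    """First-match ACL evaluation.

    lsap is DSAP << 8 | SSAP for 802.3/LLC frames, or None for Ethernet II
    frames, which carry no LSAP and only match "any" entries here.
    """
    for action, value, mask in acl:
        if value is None:
            return action
        care = ~mask & 0xFFFF        # wildcard mask: 1 bits are "don't care"
        if lsap is not None and (lsap & care) == (value & care):
            return action
    return "deny"                    # implicit deny at the end of every ACL


print(evaluate(BPDU_ACL, 0x4242), evaluate(BPDU_ACL, 0xE0E0))  # deny permit
```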

http://rickardnobel.se/archives/1461