BGP Route Replication in MPLS/VPN PE-routers

Whenever I’m explaining MPLS/VPN technology, I recommend using the same route targets (RT) and route distinguishers (RD) in all VRFs belonging to the same simple VPN. The single-RD-per-VPN recommendation doesn’t work well for multi-homed sites, so one might wonder whether it would be better to use a different RD in every VRF. The RD-per-VRF design also works, but results in significantly increased memory usage on PE-routers.

Sample Network

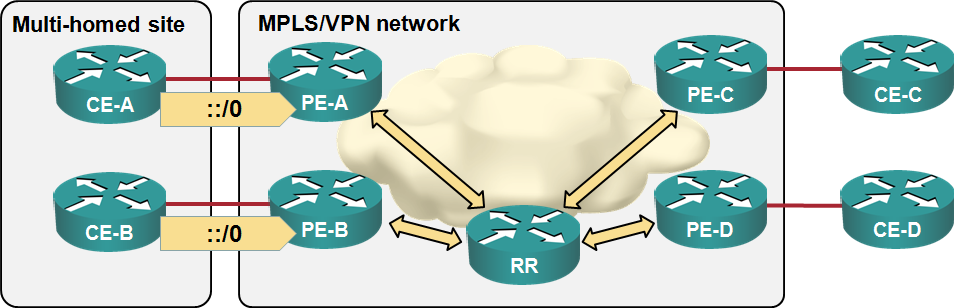

I’ll illustrate the problem using the same network I used in MPLS/VPN load balancing post:

Sample network

Yet again, CE-A and CE-B advertise a BGP default route to PE-A and PE-B, but this time PE-A, PE-B and PE-C use different route distinguishers in their VRF configurations:

VRF configuration on PE-A

    ip vrf Customer
     rd 65000:101
     route-target export 65000:101
     route-target import 65000:101

VRF configuration on PE-B

    ip vrf Customer
     rd 65000:1101
     route-target export 65000:101
     route-target import 65000:101

VRF and BGP configuration on PE-C

    ip vrf Customer
     rd 65000:102
     route-target export 65000:101
     route-target import 65000:101
    !
    router bgp 65000
     !
     address-family ipv4 vrf Customer
      no synchronization
      maximum-paths ibgp 4 import 4
     exit-address-family

The Results

When the network reaches a stable state, PE-C should have two default routes in its BGP table, one from PE-A (with RD 65000:101) and one from PE-B (with RD 65000:1101).

Now let’s inspect the BGP table on PE-C:

    PE-C#show bgp vpnv4 unicast all
    [...edited...]
       Network          Next Hop            Metric LocPrf Weight Path
    Route Distinguisher: 65000:101
    *>i0.0.0.0          172.16.0.1               0    100      0 65100 i
    Route Distinguisher: 65000:102 (default for vrf Customer)
    * i0.0.0.0          172.16.0.2               0    100      0 65100 i
    *>i                 172.16.0.1               0    100      0 65100 i
    Route Distinguisher: 65000:1101
    *>i0.0.0.0          172.16.0.2               0    100      0 65100 i

For whatever weird reason, the BGP table has four copies of the default route, not two. Let’s inspect these four entries in more detail:

    PE-C#show bgp vpnv4 unicast all 0.0.0.0
    BGP routing table entry for 65000:101:0.0.0.0/0, version 70
    Paths: (1 available, best #1, no table)
    Flag: 0x820
      Not advertised to any peer
      65100
        172.16.0.1 (metric 129) from 172.16.0.5 (172.16.0.5)
          Origin IGP, metric 0, localpref 100, valid, internal, best
          Extended Community: RT:65000:101
          Originator: 172.16.0.1, Cluster list: 172.16.0.5
          mpls labels in/out nolabel/37
    BGP routing table entry for 65000:102:0.0.0.0/0, version 106
    Paths: (2 available, best #2, table Customer)
    Multipath: eBGP
    Flag: 0x820
      Not advertised to any peer
      65100, imported path from 65000:1101:0.0.0.0/0
        172.16.0.2 (metric 129) from 172.16.0.5 (172.16.0.5)
          Origin IGP, metric 0, localpref 100, valid, internal, multipath
          Extended Community: RT:65000:101
          Originator: 172.16.0.2, Cluster list: 172.16.0.5
          mpls labels in/out nolabel/30
      65100, imported path from 65000:101:0.0.0.0/0
        172.16.0.1 (metric 129) from 172.16.0.5 (172.16.0.5)
          Origin IGP, metric 0, localpref 100, valid, internal, multipath, best
          Extended Community: RT:65000:101
          Originator: 172.16.0.1, Cluster list: 172.16.0.5
          mpls labels in/out nolabel/37
    BGP routing table entry for 65000:1101:0.0.0.0/0, version 69
    Paths: (1 available, best #1, no table)
    Flag: 0x820
      Not advertised to any peer
      65100
        172.16.0.2 (metric 129) from 172.16.0.5 (172.16.0.5)
          Origin IGP, metric 0, localpref 100, valid, internal, best
          Extended Community: RT:65000:101
          Originator: 172.16.0.2, Cluster list: 172.16.0.5
          mpls labels in/out nolabel/30

On top of the two routes received from PE-A and PE-B, there are two almost identical routes with RD 65000:102, both marked as imported paths. Weird, isn’t it?

What’s Going On?

You probably know the basic principles of MPLS/VPN and BGP route selection (read my MPLS/VPN books or watch my Enterprise MPLS/VPN webinar if you need more details). Best MPLS/VPN routes are selected using (approximately) this algorithm:

- The BGP routing process performs best path selection in the VPNv4 table using the standard set of BGP path selection rules;

- The IPv4 parts of the best-path VPNv4 prefixes with route targets matching local VRFs are inserted into the VRF routing tables (where they compete with routes learned through per-VRF routing protocols based on their administrative distance);

- The per-VRF FIB is built from the VRF routing table (more details in RIBs and FIBs).
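The second step is easier to picture with a toy model. Here is a minimal Python sketch of how a VRF routing table might pick between a BGP-derived route and a route from a per-VRF routing protocol. The distances are the Cisco IOS defaults; the OSPF route, the `10.1.0.0/16` prefix and all class and function names are hypothetical, and the model ignores multipath.

```python
from dataclasses import dataclass

# Default Cisco IOS administrative distances; lower is preferred.
ADMIN_DISTANCE = {"ebgp": 20, "ospf": 110, "rip": 120, "ibgp": 200}


@dataclass(frozen=True)
class Candidate:
    prefix: str
    source: str      # key into ADMIN_DISTANCE
    next_hop: str


def build_vrf_rib(candidates):
    """Keep, per prefix, the candidate with the lowest administrative distance."""
    rib = {}
    for cand in candidates:
        best = rib.get(cand.prefix)
        if best is None or ADMIN_DISTANCE[cand.source] < ADMIN_DISTANCE[best.source]:
            rib[cand.prefix] = cand
    return rib


candidates = [
    Candidate("0.0.0.0/0", "ibgp", "172.16.0.1"),    # best VPNv4 path with a matching RT
    Candidate("0.0.0.0/0", "ospf", "10.0.0.2"),      # hypothetical PE-CE OSPF route
    Candidate("10.1.0.0/16", "ibgp", "172.16.0.2"),  # hypothetical prefix, no competitor
]
rib = build_vrf_rib(candidates)
for prefix, route in sorted(rib.items()):
    print(prefix, "via", route.next_hop, f"({route.source})")
```

In this made-up case the OSPF route wins the default route because its administrative distance (110) is lower than the one used for IBGP routes (200).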

The first step in this process (BGP best path selection) cannot work correctly if the prefixes in the VPNv4 table are not comparable. Remember: we have to compare the whole VPNv4 prefix (RD plus IPv4 prefix) as different customers might have overlapping address spaces.

The BGP process thus has to make local copies of those BGP paths that have RDs different from the local RD value to make them comparable to local BGP paths (for example, routes received from a CE-router through an EBGP session). The BGP paths received from other PE-routers are imported (the BGP process creates a copy with the local value of the RD) and then used in the BGP best path selection process.
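The import step can be modeled in a few lines of Python. This is only a sketch of the behavior described above, not the actual IOS implementation: the `Path`, `Vrf` and `replicate` names are made up, and the model keeps every received path without any inbound route-target filtering.

```python
from collections import defaultdict
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Path:
    rd: str                    # route distinguisher of this table entry
    prefix: str                # IPv4 part of the VPNv4 prefix
    next_hop: str
    route_targets: frozenset   # RT extended communities
    imported_from: str = ""    # RD of the original path ("" if received as-is)


@dataclass(frozen=True)
class Vrf:
    name: str
    rd: str
    import_rts: frozenset


def replicate(received, vrfs):
    """Return the VPNv4 table: received paths plus per-VRF imported copies."""
    table = defaultdict(list)              # (rd, prefix) -> list of paths
    for path in received:
        table[(path.rd, path.prefix)].append(path)
    for vrf in vrfs:
        for path in received:
            if path.rd == vrf.rd:
                continue                   # already comparable, no copy needed
            if path.route_targets & vrf.import_rts:
                copy = replace(path, rd=vrf.rd, imported_from=path.rd)
                table[(vrf.rd, path.prefix)].append(copy)
    return dict(table)


rt = frozenset({"65000:101"})
received = [
    Path("65000:101", "0.0.0.0/0", "172.16.0.1", rt),    # from PE-A
    Path("65000:1101", "0.0.0.0/0", "172.16.0.2", rt),   # from PE-B
]
table = replicate(received, [Vrf("Customer", "65000:102", rt)])
for (rd, prefix), paths in sorted(table.items()):
    for p in paths:
        note = f" (imported from {p.imported_from})" if p.imported_from else ""
        print(f"{rd}:{prefix} via {p.next_hop}{note}")
```

Fed with the two default routes from the sample network, the model ends up with four paths: the two received ones and two copies under the local RD 65000:102.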

Is This Bad?

As always, the answer is “It depends.” If you have an occasional route distinguisher mismatch, you’ll slightly increase your memory consumption. But if you go to the extreme and use a different VRF and RD for every site connected to a PE-router, the overhead of the imported BGP routes might become significant.

Let’s continue with our example and add another VRF on PE-C with the same RT but a different RD:

    ip vrf Site-B
     rd 65000:103
     route-target export 65000:101
     route-target import 65000:101

As expected, PE-C creates another copy of the default routes received from PE-A and PE-B, further increasing BGP memory consumption:

    PE-C#show bgp vpnv4 unicast all
    [...edited...]
       Network          Next Hop            Metric LocPrf Weight Path
    Route Distinguisher: 65000:101
    *>i0.0.0.0          172.16.0.1               0    100      0 65100 i
    Route Distinguisher: 65000:102 (default for vrf Customer)
    * i0.0.0.0          172.16.0.2               0    100      0 65100 i
    *>i                 172.16.0.1               0    100      0 65100 i
    Route Distinguisher: 65000:103 (default for vrf Site-B)
    * i0.0.0.0          172.16.0.2               0    100      0 65100 i
    *>i                 172.16.0.1               0    100      0 65100 i
    Route Distinguisher: 65000:1101
    *>i0.0.0.0          172.16.0.2               0    100      0 65100 i
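The growth is easy to estimate with a back-of-the-envelope calculation. The Python sketch below counts the paths kept for a single prefix, assuming every local VRF imports every received path (matching RTs) and that a copy is made only when the RDs differ. The function name is made up, and it counts paths, not bytes.

```python
def vpnv4_paths(received_rds, local_vrf_rds):
    """Count the paths held in the VPNv4 table for one prefix.

    received_rds:  RDs of the paths received from remote PE-routers
    local_vrf_rds: RDs of the local VRFs importing those paths
    """
    copies = sum(
        1
        for vrf_rd in local_vrf_rds
        for rd in received_rds
        if rd != vrf_rd        # a copy is needed only when the RDs differ
    )
    return len(received_rds) + copies


received = ["65000:101", "65000:1101"]
one_vrf = vpnv4_paths(received, ["65000:102"])                 # VRF Customer only
two_vrfs = vpnv4_paths(received, ["65000:102", "65000:103"])   # Customer and Site-B
same_rd = vpnv4_paths(["65000:101"], ["65000:101"])            # single RD per VPN
print(one_vrf, two_vrfs, same_rd)  # 4 6 1
```

The first two numbers match the four and six entries in the printouts above; the last one shows that a path received with the local RD needs no copy.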

Summary

If you offer a simple VPN service, using a single RD and RT value for a simple VPN is still the best advice I can give you. If you plan to support multipath load sharing or fast failover, the per-PE-per-VRF RD is one way to get it working, or you could use BGP Add Paths functionality if your platform supports it for VPNv4 prefixes.

Revision History

- 2012-07-24

- Updated the conclusions based on feedback from nosx

- 2023-01-18

- Point out the alternative: BGP Add Paths

Need Help?

- The basics of MPLS/VPN technology are described in the MPLS Essentials webinar (available with free subscription)

- MPLS/VPN enterprise use cases are described in the Enterprise MPLS/VPN Deployments webinar (part of standard subscription).

- We discussed the impact of using this trick with Internet in a VRF in the December 2021 Design Clinic.

The ability to ensure that multiple or alternate paths via different originating PEs in the same VPN are made available to the far-end PE is beneficial enough to outweigh the need for additional memory. It's not as if we can't always add hundreds of gigs of RAM to route reflectors; the biggest limitation in most modern carrier networks is the size of the average device's hardware forwarding table (TCAM in most devices).

BTW, per-PE-per-VRF RDs won't cause a memory hit on route reflectors (they have to store all prefixes anyway, unless you use a RR hierarchy), but they will on the PE-routers, where the BGP memory consumption will probably increase by around 80%.

One thing is not clear to me regarding the actual import of a route from the BGP VPNv4 RIB to the VRF RIB. If the RT import statement is removed from the VRF config, then we can no longer see that particular route in the VRF RIB, and that is clear, because there is no matching RT inside the VRF and we cannot import the VPNv4 route. But in my simulation, when the RT import is removed from the config, I also can't see that same route in the BGP VPNv4 RIB. Why? Is this VPNv4 route rejected by BGP VPNv4 as well? I thought that the VPNv4 route is maintained in the BGP VPNv4 RIB and moves to the VRF RIB after the RT import is applied, but apparently the BGP VPNv4 table is discarding the route without a matching RT as well?!

Thanks for comments

Pretty sure this is 100% accurate. We also experience no imports even if the VRF has an RT import statement, but no interfaces are assigned to that VRF.

That may be default behavior or it may be that we use RTC as well, I could be wrong!

I know this is an old thread but I wanna say a huge thank you to the site owner for these explanations. You've made understanding MPLS/VPN nuances soooo much easier and I can't thank you enough!

Thanks a million! Made my day ;)

Ivan, As per Christian’s comments, and having read this “a few times” 😃- it’s wonderfully informative and makes sense - thanks again Rich