CloudSwitch – VLAN extension done right

I first heard about CloudSwitch when writing about vCider. It seemed like an interesting idea and I wanted to explore the networking aspects of cloud VLAN extension for my EuroNOG presentation. My usual approach (read the documentation) failed – the documentation is not available on their web site – but I got something better: a briefing from Damon Miller, their Director of Technical Field Operations. So, this is how I understand CloudSwitch works (did I get it wrong? Write a comment!):

- It’s tightly integrated with VMware and vCenter;

- It allows you to move a virtual machine from a vSphere environment into an IaaS cloud (Amazon EC2 or Terremark);

- The virtual disks associated with the migrated VM are also moved into the cloud and encrypted;

- The VM migrated into the cloud retains LAN connectivity with your home network, giving you almost seamless workload mobility (no, you can’t move running VMs, stop asking for unicorn tears).

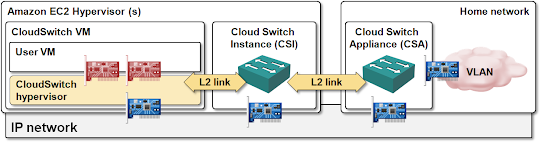

The architecture supporting the cloud-to-DC VLAN connectivity is by necessity a bit convoluted; here’s my attempt at making sense of it:

CloudSwitch has to deploy a wrapper around the user VM running in the IaaS environment, so that the user VM sees the same interfaces (working with the same device drivers) as it would in vSphere.

CloudSwitch uses nested hypervisors (its hypervisors run as VM instances in EC2); the CloudSwitch hypervisors intercept all disk and network requests made by the user VM. Disk requests are encrypted and written to cloud-based storage; Ethernet frames generated by the user VM’s vNIC are also encrypted and forwarded in UDP packets to a Cloud Switch Instance (CSI) – a VM acting as a bridge (for cloud-based VMs) and VPN termination point (for the cloud-to-DC link).

Each CSI handles a single VLAN; if you need more than one logical segment in the cloud, you have to deploy multiple CSIs.

The network virtualization provided by the CloudSwitch hypervisor can alleviate networking limitations imposed by IaaS providers; for example, you can have as many NICs as you wish in your VM (Amazon EC2 gives you a single NIC per VM).

The CSI’s counterpart sitting in your data center is the Cloud Switch Appliance (CSA) – a VM that controls the CloudSwitch environment and provides connectivity for up to four VLANs. CSA has two components – the control/management component and the data path component (CloudSwitch DataPath – CSD).

The CSA’s management component establishes VPN tunnels with the cloud-based CSIs; the CSD bridges data between individual VM NICs and the VPN tunnels. CSD uses standard VMware NICs and can thus connect a VLAN or any other port group construct with the cloud-based virtual segment.

CSD probably uses promiscuous mode on its vNICs to receive all the data that has to be bridged, which means that it wouldn’t work with vCDNI-based port groups. VXLAN just might work because it doesn’t use the VMsafe Network API like vCDNI does.

So far, CloudSwitch sounds like any other bridging solution, but there are a few extras:

Fragmentation. CloudSwitch does not rely on jumbo frames or other mechanisms (like IP Multicast) that might not work over the Internet. It automatically fragments and reassembles the Ethernet frames.
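To make the fragmentation idea concrete, here is a minimal Python sketch of splitting an Ethernet frame into tunnel-sized pieces and putting it back together. The 8-byte header layout (frame ID, fragment index, fragment count) and the names `fragment` and `Reassembler` are my own assumptions for illustration; the actual CloudSwitch wire format was not part of the briefing.

```python
import struct
from typing import Dict, List, Optional

# Hypothetical fragment header: frame ID (32 bits), index and count (16 bits each).
HEADER = struct.Struct("!IHH")


def fragment(frame_id: int, frame: bytes, max_payload: int) -> List[bytes]:
    """Split one Ethernet frame into datagram payloads of at most max_payload bytes."""
    chunks = [frame[i:i + max_payload] for i in range(0, len(frame), max_payload)]
    if not chunks:
        chunks = [b""]  # an empty frame still travels as one fragment
    return [HEADER.pack(frame_id, idx, len(chunks)) + chunk
            for idx, chunk in enumerate(chunks)]


class Reassembler:
    """Collect fragments per frame ID; return the whole frame once all have arrived."""

    def __init__(self) -> None:
        self._pending: Dict[int, Dict[int, bytes]] = {}

    def add(self, datagram: bytes) -> Optional[bytes]:
        frame_id, idx, total = HEADER.unpack_from(datagram)
        parts = self._pending.setdefault(frame_id, {})
        parts[idx] = datagram[HEADER.size:]
        if len(parts) < total:
            return None  # still waiting for more fragments
        del self._pending[frame_id]
        return b"".join(parts[i] for i in range(total))
```

Fragments can arrive in any order because each one carries its own index. A real implementation would also need a timeout to discard incomplete frames, which this sketch leaves out.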

Embedded encryption. I know you can always add IPsec to your VPLS-over-MPLS-over-GRE-over-IP stack, but it’s nice if you don’t have to worry about it. CloudSwitch uses 128-bit Blowfish with hourly key rotation by default; you can configure AES or 256-bit keys.
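The hourly key rotation is easy to picture as a timer wrapped around a random session key. The sketch below is only that, a sketch: the class name `RotatingKey`, the use of Python's `secrets` module, and the injectable clock are my assumptions, and it deliberately ignores how the two tunnel endpoints agree on the new key (that part was not covered in the briefing).

```python
import secrets
import time


class RotatingKey:
    """Hold a random session key and replace it once it is older than lifetime_s."""

    def __init__(self, key_bytes: int = 16, lifetime_s: float = 3600.0,
                 clock=time.monotonic) -> None:
        self._key_bytes = key_bytes      # 16 bytes = 128-bit key
        self._lifetime_s = lifetime_s    # 3600 s = hourly rotation
        self._clock = clock              # injectable so the logic can be tested
        self._rotate()

    def _rotate(self) -> None:
        self._key = secrets.token_bytes(self._key_bytes)
        self._created = self._clock()

    def current(self) -> bytes:
        """Return the active key, generating a fresh one if the old one expired."""
        if self._clock() - self._created >= self._lifetime_s:
            self._rotate()
        return self._key
```

Passing `key_bytes=32` would model the 256-bit option; the cipher itself is out of scope here.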

Scalable architecture. A CSI VM controls a single LAN; you can deploy as many CSIs as needed. A CSA can control four VLANs, but you can also deploy additional CSD virtual machines controlled by the same CSA.

The fragmentation and software encryption take their toll – the maximum throughput you can expect from a single CSA/CSD VM is around 500 Mbps. Obviously, the CSA/CSD architecture allows you to add more VMs (and CPU cores) if needed.
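For back-of-the-envelope sizing, the 500 Mbps figure translates into a simple division. The sketch below assumes throughput scales linearly with the number of data path VMs, which is my simplification and not something the vendor claimed; `data_path_vms_needed` is a hypothetical helper name.

```python
import math


def data_path_vms_needed(target_mbps: float, per_vm_mbps: float = 500.0) -> int:
    """Estimate how many CSA/CSD VMs are needed, assuming linear scaling."""
    return max(1, math.ceil(target_mbps / per_vm_mbps))


# Example: a 1.2 Gbps cloud-to-DC requirement
print(data_path_vms_needed(1200))  # prints 3
```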

No spanning tree. CloudSwitch uses IP transport with all related benefits (including fast convergence and equal-cost multipath). Loops cannot form within a single CloudSwitch-extended VLAN, so there’s no need to run Spanning Tree Protocol.

Obviously, a highly creative individual might create a loop by bridging vNICs in two multi-NIC virtual machines, but it’s quite impossible to devise a technical solution to deal with such creativity.

Traffic trombones. If you have some of your virtual machines in your data center, the others in the cloud, and they think they’re in the same LAN, traffic trombones will inevitably occur. You could split the two groups into two subnets (see below) and/or use the firewall/load balancer appliance supplied with the CloudSwitch solution. It’s a VM that runs in the public cloud, interfaces with the Internet, and provides inbound firewalling and load balancing. It can also use source NAT (SNAT) to ensure the return traffic exits through the load balancer and not through the default gateway, which might reside in your data center.

MAC address awareness. CSA knows exactly which MAC addresses reside in the cloud (CloudSwitch is tightly integrated with vCenter and the CloudSwitch hypervisor controls cloud-based user VMs). It can thus limit the flooding of unknown unicasts, much like OTV. It can also use MAC address awareness to stop source MAC spoofing.
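Here is how I imagine the forwarding decision on the data center side could look, given a known set of cloud-resident MAC addresses. This is a hedged sketch: the function `handle_dc_frame`, the `Action` names, and the exact rules are my assumptions, multicast is ignored for brevity, and the real CSA logic may well differ.

```python
from enum import Enum
from typing import AbstractSet


class Action(Enum):
    TO_CLOUD = "forward over the tunnel"
    KEEP_LOCAL = "do not send into the cloud"
    DROP = "drop as spoofed"


BROADCAST = "ff:ff:ff:ff:ff:ff"


def handle_dc_frame(src_mac: str, dst_mac: str,
                    cloud_macs: AbstractSet[str]) -> Action:
    """Decide what to do with a frame received on the data center side.

    cloud_macs holds lowercase MAC addresses known to live in the cloud.
    """
    src, dst = src_mac.lower(), dst_mac.lower()
    if src in cloud_macs:
        # A cloud-resident MAC showing up as a local source is treated as spoofed.
        return Action.DROP
    if dst == BROADCAST or dst in cloud_macs:
        return Action.TO_CLOUD
    # Unknown unicast: the destination is not in the cloud, so don't flood it there.
    return Action.KEEP_LOCAL
```

The last branch is the interesting one: a traditional bridge would flood an unknown unicast over the WAN link, while a bridge that knows every MAC behind the tunnel never has to.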

Last but definitely not least, if you’re worried about broadcast storms being propagated from your local VLANs into the cloud, you can always insert a router between your data center and the CSA/CSD. Personally, I would treat the virtual machines running in the cloud as a different security zone and put the CSA VM in its own firewalled segment.

Summary

I’m not sure about the general viability of cloudbursting (admittedly there are some interesting use cases), but if you have to do it, CloudSwitch is as good as it gets today. Moving a tested VM from the vSphere environment into Amazon EC2 with a few clicks and no manual conversions does have its appeal ... and the networking part of the solution is probably as good as it gets (or, to stay true to my snarky self, the least awful I’ve seen so far).

The realities of physics (including speed of light, serialization and forwarding delays) obviously still apply – just because two VMs think they are in the same VLAN doesn’t mean they’ll experience the same latency they would on a LAN. Some applications might be too jittery to be able to run in a CloudSwitch environment, and you might experience severe performance drops in latency-sensitive applications that use too many RPCs (or equivalent request-response operations).
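A quick calculation shows why chatty applications suffer. The round-trip times below (0.5 ms within a data center, 40 ms to the cloud) and the 200 sequential RPCs per transaction are illustrative assumptions, not measurements; `transaction_time_ms` is a hypothetical helper.

```python
def transaction_time_ms(sequential_rpcs: int, rtt_ms: float,
                        server_ms: float = 0.0) -> float:
    """Time for a transaction made of strictly sequential request-response calls."""
    return sequential_rpcs * (rtt_ms + server_ms)


lan = transaction_time_ms(200, 0.5)    # 100.0 ms with both VMs in the data center
wan = transaction_time_ms(200, 40.0)   # 8000.0 ms with one VM moved to the cloud
print(f"LAN: {lan} ms, cloud: {wan} ms, slowdown: {wan / lan:.0f}x")
```

Bandwidth does not appear in the formula at all, which is the whole point: adding more CSD VMs will not help an application that waits for every reply before sending the next request.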

Oh, and there’s an excellent educational use case for CloudSwitch: whenever the server admins drop by with futuristic ideas of unlimited inter-DC workload mobility, just help them move some VMs into the cloud and watch their reactions to the inevitable performance drop.

Related information

You’ll find a big-picture perspective as well as in-depth discussions of various data center and network virtualization technologies in my webinars: Data Center 3.0 for Networking Engineers (recording) and VMware Networking Deep Dive (recording). Both webinars are also available as part of the yearly subscription and the Data Center Trilogy.