Cloud-as-an-Appliance Design

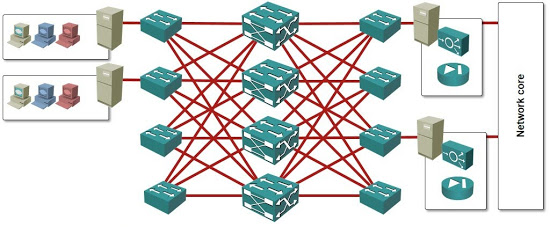

The original idea behind the cloud-as-an-appliance design came from Brad Hedlund’s blog post in which he described how he’d build a greenfield Hadoop or private cloud cluster with servers connected to a Clos fabric. Throw virtual appliances into the mix and you get an extremely simple and versatile architecture.

Here are the basic design principles:

- Build a leaf-and-spine fabric;

- Connect servers to the leaf switches;

- Dedicate a small cluster of servers to virtual appliances (firewalls and load balancers).
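To make the first two principles concrete, here is a minimal Python sketch of the leaf-and-spine wiring rule. It assumes a two-tier Clos fabric in which every leaf connects to every spine and leaves never connect to each other; the function names and the `leafN`/`spineN` naming scheme are hypothetical, chosen only for illustration. It shows why the design stays so simple: servers attached to two different leaves are always leaf → spine → leaf apart, with one equal-cost path through each spine.

```python
from itertools import product


def build_fabric(num_spines: int, num_leaves: int) -> set[tuple[str, str]]:
    """Return the (leaf, spine) links of a two-tier Clos fabric.

    Every leaf connects to every spine; there are no leaf-to-leaf or
    spine-to-spine links. Names are hypothetical labels.
    """
    spines = [f"spine{s}" for s in range(1, num_spines + 1)]
    leaves = [f"leaf{l}" for l in range(1, num_leaves + 1)]
    return {(leaf, spine) for leaf, spine in product(leaves, spines)}


def leaf_to_leaf_paths(links: set[tuple[str, str]], src_leaf: str,
                       dst_leaf: str) -> list[tuple[str, str, str]]:
    """List the equal-cost leaf -> spine -> leaf paths between two leaves."""
    src_spines = {spine for leaf, spine in links if leaf == src_leaf}
    dst_spines = {spine for leaf, spine in links if leaf == dst_leaf}
    # Any spine both leaves connect to is a valid two-hop transit point.
    return [(src_leaf, spine, dst_leaf) for spine in sorted(src_spines & dst_spines)]


fabric = build_fabric(num_spines=4, num_leaves=8)
print(len(fabric))                                          # 32 links
print(len(leaf_to_leaf_paths(fabric, "leaf1", "leaf8")))    # 4 equal-cost paths
```

In this model, adding a spine adds one more equal-cost path (and its bandwidth) between every pair of leaves, while adding a leaf adds server ports without making any path longer.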

Obviously I hand-waved over numerous details, including server and access link sizing, redundant server connectivity, separation (or not) of network and storage fabrics, and oversubscription ratios … but you get the basic idea. Let’s focus on the really interesting part.
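Oversubscription ratio is one of the details glossed over above. The usual back-of-the-envelope figure for a leaf switch divides its total server-facing bandwidth by its total spine-facing bandwidth. The sketch below assumes that definition; the function names and the port counts in the examples (48 × 10GE server ports, 4 or 12 × 40GE uplinks) are hypothetical and not sizing recommendations.

```python
from fractions import Fraction


def oversubscription(server_ports: int, server_gbps: int,
                     uplinks: int, uplink_gbps: int) -> Fraction:
    """Leaf oversubscription: server-facing bandwidth / spine-facing bandwidth."""
    downstream = server_ports * server_gbps  # total Gbps towards the servers
    upstream = uplinks * uplink_gbps         # total Gbps towards the spines
    return Fraction(downstream, upstream)    # reduced to lowest terms


def as_ratio(r: Fraction) -> str:
    """Format the ratio the way it's usually quoted, e.g. '3:1'."""
    return f"{r.numerator}:{r.denominator}"


# Hypothetical leaf: 48 x 10GE server ports, 4 x 40GE uplinks -> 480 / 160
print(as_ratio(oversubscription(48, 10, 4, 40)))   # 3:1
# Same leaf with 12 x 40GE uplinks -> 480 / 480, a non-blocking leaf
print(as_ratio(oversubscription(48, 10, 12, 40)))  # 1:1
```

A ratio above 1:1 means the leaf cannot send traffic from all its servers towards the spines at line rate at the same time; how much oversubscription is acceptable depends on the traffic patterns you expect.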

External connectivity

In the above design I’ve inserted a dedicated appliance cluster between the leaf-and-spine fabric and the network core. A dedicated cluster solves several interesting problems:

- It removes most of the security audit concerns. It’s debatable how safe it is to run virtual appliances (especially firewalls) on the same physical hosts as user VMs, but if the virtual firewalls are the only workload running on a particular physical host, it’s hard to argue that they would be any less secure than the same firewall software running on a reassuringly expensive hardware appliance.

- It provides almost perfect isolation. Add external-facing NICs in the hosts running the virtual appliances, connect those NICs to the network core, and you have a design that’s identical to the traditional firewall-based design (the only exception being the hardware used to run the firewalling software).

- Virtual appliances have different resource needs than regular VMs. Regular VMs usually have (relatively) high memory requirements, and lower CPU/network requirements. Virtual appliances don’t use much memory, but need plenty of I/O bandwidth and CPU cores. The servers in the appliance cluster should thus be dimensioned differently than the other servers in the private cloud you’re building.

- The appliance cluster could use a different virtualization technology. Some appliance vendors have started supporting Linux containers (Riverbed), which significantly reduce the per-appliance memory footprint, or dedicated software running on generic x86 hardware (LineRate Systems, now F5). Some open-source cloud orchestration platforms (for example, CloudStack) support Linux containers, allowing you to build a system with an optimal mix of virtualization platforms.
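The isolation argument in the first bullet boils down to a placement rule: virtual appliances run only on the appliance cluster, and user VMs never land there. The Python sketch below expresses that rule as a simple scheduler filter. The `Host`/`VM` classes, the `"appliance"`/`"compute"` cluster labels and the host names are hypothetical; a real cloud orchestration platform would typically enforce the same constraint through its own mechanisms (for example, host tags or affinity rules).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    cluster: str  # hypothetical labels: "appliance" or "compute"


@dataclass(frozen=True)
class VM:
    name: str
    is_appliance: bool  # True for virtual firewalls and load balancers


def allowed_hosts(vm: VM, hosts: list[Host]) -> list[Host]:
    """Return the hosts this VM may be placed on.

    Appliance VMs are confined to the dedicated appliance cluster, and user
    VMs are kept off it, so a virtual firewall never shares a physical host
    with user workloads.
    """
    wanted = "appliance" if vm.is_appliance else "compute"
    return [h for h in hosts if h.cluster == wanted]


hosts = [Host("host1", "compute"), Host("host2", "compute"), Host("appl1", "appliance")]
print([h.name for h in allowed_hosts(VM("web1", False), hosts)])  # ['host1', 'host2']
print([h.name for h in allowed_hosts(VM("vfw1", True), hosts)])   # ['appl1']
```

Keeping the rule in one place also makes the different dimensioning of the appliance hosts (more cores and I/O bandwidth, less memory) easy to reason about, because nothing else is ever scheduled on them.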

A dedicated appliance cluster connecting the private cloud infrastructure with the outside world makes the most sense when you’re deploying complex applications that need firewall-based protection and external load balancing. If most of your workload consists of single-VM apps (what I called the SMB LAMP stack), you’d be better off using private VLANs on the leaf switches and L3 forwarding on the spine switches.

Going back to your example:

In an Enterprise Private Cloud, your cluster of network services VMs would be a per-tenant or per-app appliance rather than a cloud-as-an-appliance, right?

The usual follow-up question is whether you still want and need a DC-wide firewall for broad protection; that would be the cloud-as-an-appliance you describe. Is this correct? Just want to clarify.

Either way, in reality you could end up with two tiers of virtualized network services, but both should have dedicated compute clusters. What do you think?

The outermost tier still needs greater throughput because it acts as an aggregation device... a basic hardware box may still make sense in the short term due to Layers 8-10, but we all know there are solutions where virtual appliances can be optimized to achieve throughput far beyond WAN and Internet connection speeds (at least in the Enterprise).

-Jason